Explicit AI deepfake photos of Taylor Swift flood social media, circumventing security measures

This week, fake sexually explicit photos of Taylor Swift, probably created by artificial intelligence, proliferated on social media, alarming fans and rekindling calls from lawmakers to protect women and to take action against the platforms and technology that spread such images.

One image shared by a user on X was viewed 47 million times before the account was suspended on Thursday. X suspended several accounts that had posted the fake images of Ms. Swift, but the images continued to spread on other social media platforms despite those companies' efforts to remove them.

While X said it was working to remove the images, fans of the pop star flooded the platform in protest. They posted related keywords along with the sentence “Protect Taylor Swift” in an effort to bury the explicit images and make them harder to find.

Ben Colman, the co-founder and CEO of Reality Defender, a cybersecurity startup that specializes in detecting artificial intelligence, said the company had determined with 90 percent confidence that the images were created using a diffusion model, an AI-driven technology available through more than 100,000 apps and publicly available models.

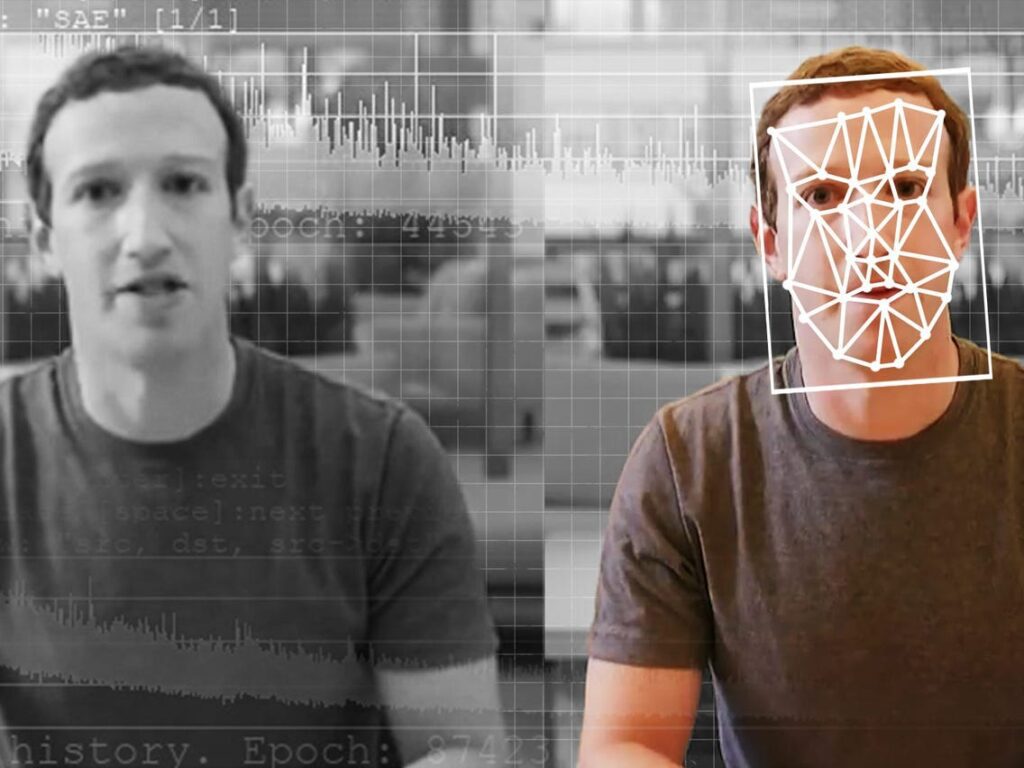

As the A.I. industry has boomed, companies have raced to release tools that let users create images, videos, text, and audio recordings with simple prompts. The A.I. tools are very popular, but they have also made it easier and cheaper than ever to create so-called deepfakes, which portray people saying or doing things they have never done.

Researchers now worry that deepfakes could become a potent misinformation tool, allowing ordinary internet users to create indecent images or unflattering portrayals of political figures. Artificial intelligence was used to generate fake robocalls imitating President Biden during the New Hampshire primary, and Ms. Swift appeared this month in deepfake advertisements for cookware.

“It’s always been a dark undercurrent of the internet, nonconsensual pornography of various sorts,” said Oren Etzioni, a computer science professor at the University of Washington who works on deepfake detection. “Now it’s a new strain of it that’s particularly noxious.”

Mr. Etzioni said that a flood of these explicit A.I.-generated images is coming, and that the people who created them see them as a success.

X said that it would not tolerate such content. “Our teams are actively removing all identified images and taking appropriate actions against the accounts responsible for posting them,” a spokesman said in a statement. The company added that it was monitoring the situation closely to make sure that any further violations are dealt with immediately and the content is taken down.

X has seen a surge in harmful content, including hate speech, disinformation, and harassment, since Elon Musk bought the site in 2022. He has relaxed the site's content policies and fired, laid off, or accepted the resignations of employees who worked to remove offensive material. The platform also reinstated accounts that had previously been banned for breaking its rules.

Although many of the companies that make generative A.I. tools forbid their users from producing explicit imagery, people still find ways around the restrictions. “There’s an arms race going on, and it seems like every time someone creates a barrier, someone else finds a way to get past it,” Mr. Etzioni said.

According to 404 Media, a technology news site, the images originated in a channel on the messaging app Telegram that is dedicated to producing such images. The deepfakes drew widespread attention, however, after being posted on X and other social media platforms.

Several states have restricted pornographic and political deepfakes. But the restrictions have not had a significant effect, and there are no federal regulations covering deepfakes, Mr. Colman said. Platforms have tried to combat deepfakes by asking users to report them, but that approach has not worked, he added. By the time the images are flagged, millions of users have already seen them. “The toothpaste is already out of the tube,” he said.

Tree Paine, Ms. Swift’s publicist, did not immediately reply to messages left late on Thursday seeking comment.

The deepfakes of Ms. Swift prompted renewed calls for action from lawmakers. Representative Joe Morelle, a Democrat from New York who introduced a bill last year that would make sharing such images a federal crime, said on X that the spread of the photos was “appalling,” adding: “It’s happening to women everywhere, every day.”

“I’ve repeatedly warned that AI could be used to generate non-consensual intimate imagery,” Senator Mark Warner, a Democrat from Virginia and the chairman of the Senate Intelligence Committee, said of the photos on X. “This situation is appalling.” Representative Yvette D. Clarke, a Democrat from New York, said that advances in artificial intelligence had made deepfakes easier and cheaper to produce. “What has happened to Taylor Swift is nothing new,” she said.

What are deepfakes?

The term “deepfake” refers to content, usually videos or images, that has been created or manipulated with artificial intelligence (AI) and machine learning techniques so that it appears real. The word combines “deep learning” and “fake.” Deepfake content is frequently produced using deep learning algorithms, especially generative models such as Generative Adversarial Networks (GANs). Misuse of deepfake technology can take many forms, and it raises serious ethical, societal, and security concerns:

- Information Manipulation: Deepfake technology can alter videos or images so that someone appears to say or do things they never did. This could be abused to spread misleading information, disparage people, or damage their reputations.

- Political Manipulation: Deepfakes can be used to produce fake videos of prominent figures, influencers, or politicians making statements or taking part in events that never happened. Such videos can be used to sow discord or to influence elections and public opinion.

- Identity Theft: By superimposing one person’s face onto another’s in a video, deepfakes can be used to impersonate that person. This can lead to identity theft, with dishonest actors fabricating convincing fake videos to support scams.

- Disinformation and Fake News: Deepfake technology can produce interviews or news footage that appears authentic but never took place. This can help fake news spread and make it harder for people to distinguish manipulated content from real content.

- Threats to Cybersecurity: Deepfake technology also puts cybersecurity at risk. For example, deep learning-based voice cloning can be used in phishing attacks, in which perpetrators impersonate trusted people in an attempt to deceive and manipulate their targets.

- Privacy Issues: Because deepfake content is so easy to create, it raises privacy concerns. Individuals may unknowingly appear in altered material, which could harm their personal and professional lives.

A great deal of work is going into developing techniques for detecting deepfakes and raising awareness of the technology. Deepfake technology is advancing quickly, however, and both tech developers and society as a whole must keep working to reduce the potential for misuse.

To learn more about deepfakes and the issues associated with them, visit: https://en.wikipedia.org/wiki/Deepfake